Recently, on this blog, I've been musing about our foundational assumptions and how they color our thinking (for example, see here and here). In my last post, I briefly described a situation where this has affected my lab. In one of our computational studies, our model explained the experimental data better when two straightforward assumptions were made (see below). The problem was, one of those assumptions implied that Cactus can be found in the nucleus, even though it has always been conceived as a strictly cytoplasmic protein. This baseline conception colored some people's reactions so strongly that they rejected our assumption, even though it was plausible.

The question I therefore raised at the end of the last post was whether our two assumptions subsequently needed to be validated experimentally. I am itching to get to that question, but before we address it, we should walk through what those two assumptions were and what experimental data are explained by the model. However, if you don't wish to read those details, here is a brief summary: The first assumption was not only straightforward, but it also provided an obvious way to match known data. The second assumption was more plausible than its denial, and resulted in a model that answered a problem with our understanding of the Dorsal gradient. Now, on to the details:

First assumption: After mitosis, when the nuclear envelope reforms, the contents of the nucleus (accidentally) reflect the contents of the surrounding cytoplasm. In other words, when the nuclear envelope "encloses" the nucleus, whatever protein-sized molecules happen to be in the cytoplasm at that time can get enclosed as well.

The reason why we made this assumption is that, as soon as interphase begins, there is fluorescence in the nuclei. We saw that in live embryos expressing a Dorsal-GFP tag (see Reeves et al., 2012). There are further details to this, but suffice it to say that a simple way to explain this observation is that nuclei do not begin interphase empty.

Second assumption: If our first assumption is correct, and the nuclei do not begin interphase empty, then a chain of important implications ensues. The first implication is that both Cactus and Dorsal/Cactus complex could reside in the nucleus. That in turn implies that some of the fluorescence we measure, either in live Dorsal-GFP embryos or in fixed embryos fluorescently immunostained against Dorsal, may originate from Dorsal/Cactus complex. And this implies that our fluorescence measurements are not of the active Dorsal gradient, but of the total Dorsal gradient (free Dorsal + Dorsal/Cactus complex). At that point, the only way to infer the active Dorsal gradient is to use our computational model.

The reason why we made this assumption was that it seemed more obvious than its denial. However, this second assumption resulted in a large boon for our model: it now was able to correctly predict the expression of genes that depend on Dorsal signaling. Without going into too much detail, we have known since 2009 that the Dorsal gradient is too narrow to express genes like sog and dpp, which have borders roughly 50% of the way around the embryo (see here for more explanation). However, our model predicted the active Dorsal gradient (as opposed to the measured, total Dorsal gradient) may indeed be broad enough to place the sog and dpp gene expression borders.
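To make the distinction between the total and the active gradient a bit more concrete, here is a toy sketch in Python. It is not our published model: the Gaussian shape for free Dorsal, the uniform level of Dorsal/Cactus complex, the parameter values, and the function names `toy_gradients` and `fold_change` are all assumptions made up for illustration. It only shows the bookkeeping, namely that fluorescence reports the sum of the two pools, so the measured profile can have a different shape than the active one.

```python
import numpy as np

def toy_gradients(n_points=101, free_width=0.25, complex_level=0.3):
    """Return (x, free, complex_, total) along a toy dorsal-ventral axis.

    x runs from 0 (ventral midline) to 1 (dorsal midline). All shapes and
    parameter values are hypothetical and chosen only for illustration.
    """
    x = np.linspace(0.0, 1.0, n_points)
    # Assumed shape: free (active) nuclear Dorsal peaks at the ventral midline.
    free = np.exp(-x**2 / (2.0 * free_width**2))
    # Assumed shape: nuclear Dorsal/Cactus complex is uniform along the axis.
    complex_ = np.full_like(x, complex_level)
    # Fluorescence cannot tell the two pools apart, so it reports their sum.
    total = free + complex_
    return x, free, complex_, total

def fold_change(profile, x, x_a, x_b):
    """Ratio of the profile at the grid point nearest x_a to that nearest x_b."""
    i_a = np.argmin(np.abs(x - x_a))
    i_b = np.argmin(np.abs(x - x_b))
    return profile[i_a] / profile[i_b]

x, free, complex_, total = toy_gradients()

# Recovering the active gradient requires an estimate of the complex, which
# in practice would have to come from a model, not from the image itself.
inferred_active = total - complex_

print("fold change 40% -> 60%, total :", fold_change(total, x, 0.4, 0.6))
print("fold change 40% -> 60%, active:", fold_change(free, x, 0.4, 0.6))
```

In this toy setup the measured (total) profile changes less between 40% and 60% of the axis than the active profile does, because the complex adds a background that the measurement cannot separate out. Whether the real active gradient is broad enough to place the sog and dpp borders depends on the full model, which this sketch does not attempt to reproduce.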

Now that we have the details of our assumptions and implications in hand, in the next post I will discuss why I no longer think experimental validation of our assumptions is necessary.

Tuesday, December 12, 2017

Monday, December 4, 2017

Validating assumptions

Oftentimes, our interpretations of our experimental data depend on the assumptions that we bring to the table. I have previously discussed the problem of holding unexamined assumptions (see here and here), and how they can pervade our thinking. Here I want to ask how we would validate our assumptions. In particular, if we have a model that is built on assumptions, what kind of data count as evidence in favor of the model and its assumptions?

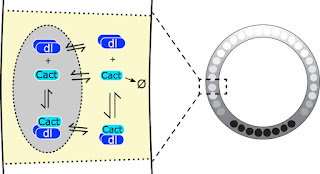

This is a pertinent question for me because, in my lab, we do both modeling and experiment, and sometimes our modeling work is not trusted in the same way that experimental results would be. We had a paper a couple of years ago (O'Connell and Reeves, 2015) that made a few new assumptions about the Dorsal/Cactus system (see here for a short intro to that system), which resulted in a much better explanation of past data, but it also resulted in a slightly novel way of looking at the system (see Figure). Specifically, we suggested that not just free Dorsal, but also Dorsal/Cactus complex and free Cactus could enter the nuclei.

This idea had not been proposed before (at least, not at this stage in Drosophila development), and reviewers were suspicious. We even had a reviewer of a subsequent manuscript try to reject that manuscript because of the assumptions we made in the 2015 paper!

Now, given that we have a wet lab, one way to test our assumptions would be to simply perform well-designed experiments. But sometimes, that's easier said than done. In this particular case, because Cactus is difficult to image (both in fixed embryos and in live imaging), all of the proposed experiments would be indirect validations of our results, at best.

So the question is: do we actually need to validate these assumptions? That's not as radical a question as it might sound at first. I am basically asking: weren't the assumptions already validated by the fact that the model provided a better explanation of previous data? This is the question that we'll begin to look at next time.

Figure: In the early Drosophila embryo, Dorsal (Dl) is present at high levels on the ventral side (black nuclei on right). This gradient is established by the action of Toll signaling on the ventral and lateral parts of the embryo (not shown) causing Cactus (Cact), the inhibitor for Dorsal, to split up from Dorsal and be degraded (left). Previously, it had not been proposed that Cactus or Dorsal/Cactus complex could enter the nuclei (left). For more on Dorsal, Cactus, and Toll, see here.

